In the rapidly evolving landscape of software development, the term "DevOps" has gained significant prominence.

DevOps, a blend of the words "Development" and "Operations", combines the work of development teams and operations teams into a collaborative, holistic approach to software development and deployment...

**Understanding DevOps:**

**Key Principles of DevOps:**

1. **Collaboration:** DevOps encourages open communication and cooperation between developers, testers, and operations teams. This helps in identifying and addressing potential problems early in the development process.

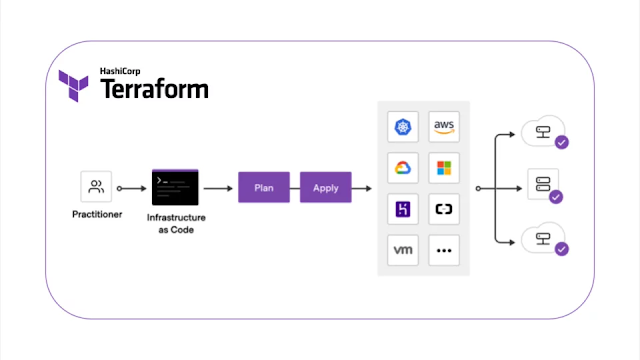

2. **Automation:** Automation is a core principle of DevOps. By automating tasks like testing, deployment, and infrastructure provisioning, teams can reduce human errors, improve efficiency, and ensure consistent processes.

Example of the DevOps lifecycle: planning your platform and mapping out what you need to accomplish at each step.

3. **Continuous Integration (CI):** CI involves integrating code changes from multiple developers into a shared repository several times a day. This ensures that new code is regularly tested and merged, reducing integration issues and improving software quality.

4. **Continuous Delivery (CD):** CD builds upon CI by automating the deployment process. It allows for the rapid and reliable release of software updates to production environments, minimising manual interventions and reducing deployment risks.

5. **Monitoring and Feedback:** DevOps emphasises real-time monitoring of applications and infrastructure. This helps teams identify performance bottlenecks, security vulnerabilities, and other issues, enabling quick remediation.
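To make the automation, CI, and CD principles a little more concrete, here is a minimal sketch of a pipeline that runs its stages in order and stops at the first failure. It is illustrative only: the `Stage` class, the `run_pipeline` function, and the stage names are hypothetical, and a real team would normally rely on a dedicated CI/CD service rather than a hand-written script.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    """One automated pipeline step (hypothetical helper for illustration)."""
    name: str
    action: Callable[[], bool]  # returns True when the step succeeds


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for stage in stages:
        if not stage.action():
            print(f"{stage.name}: failed, stopping pipeline")
            break
        print(f"{stage.name}: ok")
        completed.append(stage.name)
    return completed


def unit_tests_pass() -> bool:
    # Stand-in for a real automated test run.
    return sum([1, 2, 3]) == 6


if __name__ == "__main__":
    pipeline = [
        Stage("build", lambda: True),    # placeholder build step
        Stage("test", unit_tests_pass),  # the CI part: test every change
        Stage("deploy", lambda: True),   # the CD part: release only if tests pass
    ]
    run_pipeline(pipeline)
```

The point of the sketch is that the deploy stage is never reached when an earlier stage fails, which is how automation reduces the risk of a broken change reaching production.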

Example of the DevOps lifecycle:

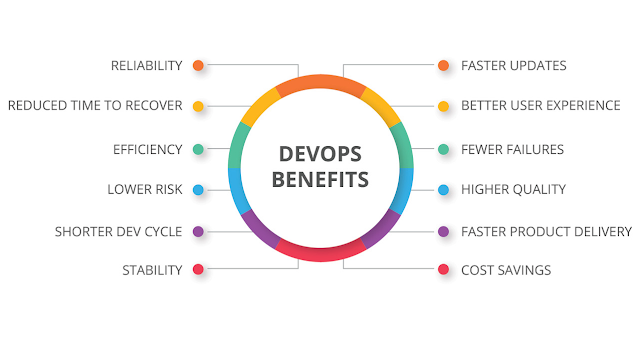
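The monitoring and feedback principle can be sketched in a similar way. The example below assumes a hypothetical `check_latency` function that receives recent response times in milliseconds and raises an alert when their average crosses a threshold. The metric, the threshold, and the message format are assumptions made for illustration, not a description of any particular monitoring tool.

```python
from statistics import mean
from typing import List, Optional


def check_latency(samples_ms: List[float], threshold_ms: float) -> Optional[str]:
    """Return an alert message if average latency exceeds the threshold, else None."""
    if not samples_ms:
        # No data collected yet, so there is nothing to alert on.
        return None
    average = mean(samples_ms)
    if average > threshold_ms:
        return f"ALERT: average latency {average:.1f} ms exceeds {threshold_ms:.1f} ms"
    return None


if __name__ == "__main__":
    # Hypothetical measurements gathered from a running application.
    print(check_latency([100, 120, 110], threshold_ms=200))
    print(check_latency([300, 500], threshold_ms=200))
```

In practice, a check like this would run continuously and feed its alerts back to the team, closing the loop between operations and development.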

**Benefits of DevOps:**

1. **Faster Time to Market:** DevOps practices enable quicker development cycles and faster release of features or updates, allowing businesses to respond to market demands more effectively.

2. **Improved Collaboration:** DevOps breaks down barriers between teams, fostering better understanding and cooperation, which ultimately leads to improved software quality.

3. **Enhanced Reliability:** Automation and continuous testing ensure that changes are thoroughly tested and consistently deployed, reducing the likelihood of failures in production environments.

4. **Scalability:** DevOps practices, combined with cloud technologies, allow applications to scale seamlessly according to demand.

5. **Higher Quality Software:** Continuous testing and feedback loops lead to higher software quality, as issues are identified and addressed early in the development process.